May 7, 2024

NTT Corporation

World's First "Learning Transfer" Technology Reuses Past Learning Trajectories, Significantly Reducing the Cost of Retraining AI Models

Easy upgrade and replacement of foundation models such as NTT's LLM "tsuzumi"

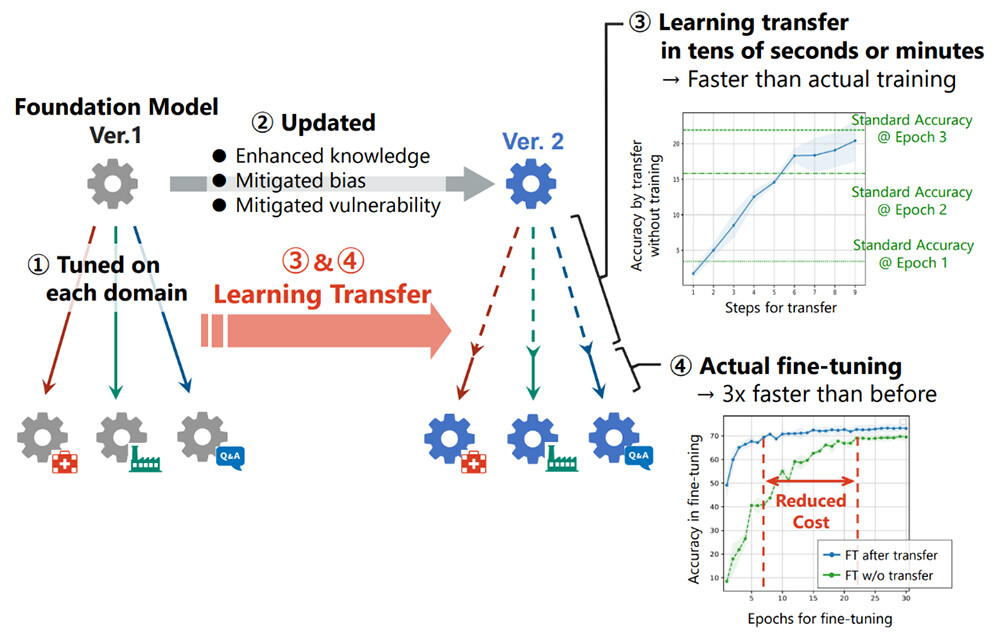

Tokyo - May 7, 2024 - NTT Corporation (NTT) has realized "learning transfer" technology, a completely new mechanism that reuses past learning trajectories across different models in deep learning. By exploiting the high symmetry in the parameter space of neural networks and appropriately transforming the parameter sequence of a past learning trajectory, the technology obtains learning results for a new model at low cost. When a large-scale foundation model [1] such as a generative AI is tuned for each application, it must be re-trained (hereafter referred to as "tuning") whenever the foundation model is periodically updated. The technology can significantly reduce this cost. This is expected to make generative AIs easier to operate, expand their application areas, and reduce power consumption. The paper detailing these results will be presented at the International Conference on Learning Representations (ICLR) 2024 [2], a top conference in the field of AI, to be held in Vienna, Austria, from May 7 to 11, 2024.

1. Background

In recent years, diverse and large-scale foundation models such as generative AIs have become available. To meet the requirements of individual companies and organizations, it has become common practice to tune these foundation models through additional learning on individual data sets. However, this tuning must be repeated whenever the underlying foundation model is updated or replaced with a different model, resulting in significant computational costs. For example, when the foundation model itself is updated to improve basic performance or to address issues such as security vulnerabilities, copyright, and privacy, every model that was obtained by tuning it must be re-tuned. In addition, since a variety of foundation models are available on the market, a developer who wants to switch the base foundation model to improve performance or reduce cost must tune again on the new foundation model.

These tuning costs cannot be ignored when using generative AIs, and are expected to be a major barrier to the future adoption of generative AIs.

2. Research Results

In deep learning, learning is generally performed by feeding a training data set to a neural network model and sequentially optimizing its parameters. The history of parameter changes during learning is called the learning trajectory of the model, and it is known to be strongly affected by the initial values and by randomness in the learning process. Until now, however, it has been unclear how the learning trajectories of models with different initial values and randomness differ from or resemble each other, so this information has not been exploited across different models.
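
To make the term concrete, the following is a minimal sketch of recording a learning trajectory. It assumes a toy least-squares problem trained with plain gradient descent; the function name `train`, the data, and the hyperparameters are illustrative assumptions and do not come from NTT's work. Two runs that differ only in their initial values produce two different parameter histories.

```python
import numpy as np

def train(seed, steps=50, lr=0.1):
    """Run gradient descent on a toy least-squares problem and record the parameters."""
    data_rng = np.random.default_rng(123)           # same data for every run
    X = data_rng.normal(size=(64, 3))
    y = X @ np.array([1.0, -2.0, 0.5])
    w = np.random.default_rng(seed).normal(size=3)  # seed-dependent initial value
    trajectory = [w.copy()]
    for _ in range(steps):
        grad = 2.0 * X.T @ (X @ w - y) / len(X)     # gradient of the mean squared error
        w = w - lr * grad
        trajectory.append(w.copy())
    return np.stack(trajectory)                     # shape: (steps + 1, number of parameters)

traj_a = train(seed=0)
traj_b = train(seed=1)
print("distance between runs at the start:", np.linalg.norm(traj_a[0] - traj_b[0]))
print("distance between runs at the end:  ", np.linalg.norm(traj_a[-1] - traj_b[-1]))
```

In this convex toy problem both runs end near the same solution. Deep neural networks generally do not behave this way, which is why relating the trajectories of different models is a non-trivial question.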

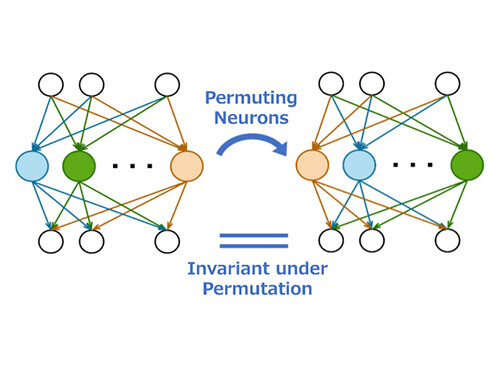

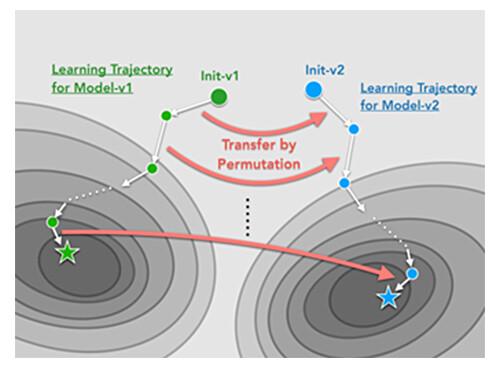

NTT focused on the high degree of symmetry in the parameter space of neural networks and discovered that the learning trajectories of different models can be made approximately identical to each other under the symmetry of permuting neurons, called permutation symmetry [3] (Figure 1). Based on this discovery, NTT proposed and demonstrated the "learning transfer" technology, in which a past learning trajectory is transformed by an appropriate permutation and reused as the learning trajectory of a new model, as shown in Figure 2.

Figure 1 Permutation Symmetry

Figure 2 Learning Transfer
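
The permutation symmetry described above can be checked numerically. The sketch below assumes a small two-layer ReLU network written in NumPy; the helper names `mlp` and `permute_hidden` and the layer sizes are hypothetical and chosen only for illustration. Reordering the hidden neurons changes the parameter arrays but leaves the network output unchanged up to floating-point rounding.

```python
import numpy as np

def mlp(x, W1, b1, W2, b2):
    """Two-layer network with ReLU hidden units."""
    h = np.maximum(0.0, x @ W1.T + b1)
    return h @ W2.T + b2

def permute_hidden(W1, b1, W2, perm):
    """Reorder hidden neurons: rows of W1, entries of b1, and columns of W2."""
    return W1[perm], b1[perm], W2[:, perm]

rng = np.random.default_rng(0)
W1, b1 = rng.normal(size=(8, 4)), rng.normal(size=8)   # 4 inputs -> 8 hidden neurons
W2, b2 = rng.normal(size=(3, 8)), rng.normal(size=3)   # 8 hidden neurons -> 3 outputs
x = rng.normal(size=(5, 4))

perm = rng.permutation(8)
W1p, b1p, W2p = permute_hidden(W1, b1, W2, perm)

out_original = mlp(x, W1, b1, W2, b2)
out_permuted = mlp(x, W1p, b1p, W2p, b2)
print("largest output difference:", np.max(np.abs(out_original - out_permuted)))
```

The incoming and outgoing weights of each hidden neuron must be moved together. Permuting only `W1` and `b1` without the columns of `W2` would change the output.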

With learning transfer, a certain level of accuracy can be achieved using only low-cost transformations, without expensive learning. Moreover, Figure 3 shows that additional learning after learning transfer converges to the target accuracy faster than standard learning.

Figure 3 Learning acceleration when updating the base foundation model
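
The idea of reusing a trajectory through a permutation can be illustrated with a toy sketch. Everything below is an assumption made for illustration and is not NTT's published procedure: the network is a tiny tanh model, the new model starts from an exactly permuted copy of the old initialization (real models match only approximately), and the hypothetical helper `match_hidden_units` finds the permutation by naive nearest-row matching.

```python
import numpy as np

def forward(params, X):
    """One-hidden-layer tanh network with a scalar output."""
    W1, b1, w2 = params
    H = np.tanh(X @ W1.T + b1)
    return H, H @ w2

def loss(params, X, y):
    return float(np.mean((forward(params, X)[1] - y) ** 2))

def gradients(params, X, y):
    """Manual backpropagation of the mean squared error."""
    W1, b1, w2 = params
    H, pred = forward(params, X)
    err = 2.0 * (pred - y) / len(X)
    dZ = np.outer(err, w2) * (1.0 - H ** 2)
    return dZ.T @ X, dZ.sum(axis=0), H.T @ err

def train(params, X, y, steps=300, lr=0.02):
    """Plain gradient descent that records every parameter state."""
    trajectory = [params]
    for _ in range(steps):
        grads = gradients(params, X, y)
        params = tuple(p - lr * g for p, g in zip(params, grads))
        trajectory.append(params)
    return trajectory

def permute(params, perm):
    """Reorder hidden units consistently across all three parameter arrays."""
    W1, b1, w2 = params
    return W1[perm], b1[perm], w2[perm]

def match_hidden_units(source, target):
    """Hypothetical matcher: nearest source row for each target row of W1.
    Only guaranteed to yield a permutation in the idealized setup below."""
    dists = np.linalg.norm(target[0][:, None, :] - source[0][None, :, :], axis=2)
    return np.argmin(dists, axis=1)

def transfer(trajectory, target_init, perm):
    """Add the permuted source displacements to the target initialization."""
    source_init = trajectory[0]
    transferred = []
    for params in trajectory:
        delta = tuple(p - p0 for p, p0 in zip(params, source_init))
        transferred.append(tuple(t + d for t, d in zip(target_init, permute(delta, perm))))
    return transferred

rng = np.random.default_rng(0)
X = rng.normal(size=(128, 2))
y = np.sin(X[:, 0]) + 0.5 * X[:, 1]
source_init = (0.5 * rng.normal(size=(8, 2)), np.zeros(8), 0.5 * rng.normal(size=8))
source_traj = train(source_init, X, y)          # the expensive step, done once

# Idealized assumption: the new model is a permuted copy of the source initialization.
target_init = permute(source_init, rng.permutation(8))
perm = match_hidden_units(source_init, target_init)
target_traj = transfer(source_traj, target_init, perm)   # no gradient computation

print("target loss before transfer:", loss(target_init, X, y))
print("target loss after transfer: ", loss(target_traj[-1], X, y))
print("source loss after training: ", loss(source_traj[-1], X, y))
```

In this idealized setup the transferred parameters reach the same loss as the trained source model without any new training. With independently initialized models the match is only approximate, which is where the additional learning mentioned above comes in.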

3. Outlook

This achievement establishes the fundamental theory of a new learning method in deep learning. As a practical use case, it shows the possibility of reducing the tuning cost incurred when a foundation model is updated or replaced.

This will contribute to the development of next-generation AI technologies, such as reducing the operating costs and environmental impact of various foundation models, including "tsuzumi" [4], a large-scale language model (LLM) developed by NTT, and realizing the concept of the "AI Constellation" [5], which aims for diverse solutions through discussion among various AIs.

[1] Foundation model

Foundation models are AI models that are trained on large amounts of data and serve as the basis for training various domain-specific models.

[2] ICLR 2024

One of the top conferences on artificial intelligence

URL: https://iclr.cc/Conferences/2024

[3] Permutation symmetry

Transformations that change the parameters of a neural network by permuting its neurons but do not change the final output.

[4] tsuzumi

NTT's large language model (LLM). It is trained on a large amount of text data and has enhanced ability to understand and generate Japanese text.

URL: https://www.rd.ntt/e/research/LLM_tsuzumi.html

[5] AI Constellation

AI Constellation is a large-scale AI collaboration technology that orchestrates various AI models, such as LLMs and rule-based models, so that the AIs discuss with and correct one another and output solutions from diverse perspectives. For details, please refer to the following press release.

URL: https://group.ntt/en/newsrelease/2023/11/13/231113b.html

About NTT

NTT contributes to a sustainable society through the power of innovation. We are a leading global technology company providing services to consumers and businesses as a mobile operator, infrastructure, networks, applications, and consulting provider. Our offerings include digital business consulting, managed application services, workplace and cloud solutions, data center and edge computing, all supported by our deep global industry expertise. We are over $97B in revenue and 330,000 employees, with $3.6B in annual R&D investments. Our operations span across 80+ countries and regions, allowing us to serve clients in over 190 of them. We serve over 75% of Fortune Global 100 companies, thousands of other enterprise and government clients and millions of consumers.

Media contact

NTT Service Innovation Laboratory Group

Public Relations

nttrd-pr@ml.ntt.com

Information is current as of the date of issue of the individual press release.

Please be advised that information may be outdated after that point.
