March 4, 2022

Demonstration of Ultra-Low Latency Screen Split Display Processing Technology for Real-time Remote Sessions

Enhancing Performance Style with Sense of Unity among Players in Distant Places

NTT Corporation (NTT) has demonstrated an ultra-low latency screen split display processing technology for multiple video streams. By utilizing the low-latency video transmission feature of the IOWN concept, the technology lets remote locations exchange video streams without perceptible time lag, enabling video communication services such as remote orchestral ensembles. A demonstration experiment was conducted on an experimental network system that transfers video captured at performance sites in Musashino, Yokosuka, and Atsugi and applies stream-type screen split display processing. The experiment confirmed that a remote ensemble performance could be achieved without the stress of video delays.

1. Background

The global spread of the novel coronavirus has led to significant changes in our lifestyles and the dispersion of various social activities, such as remote work. In the field of music entertainment, there is a growing need for real-time remote sessions over networks that eliminate the need for performers to gather in the same location.

NTT has been conducting R&D to realize realistic remote performances, developing technologies that synchronize video and audio streams transmitted from separate locations and then broadcast the result live to viewers. This time, focusing on improving the sense of unity among performers as they play, our R&D activities have yielded a remote communication system that enables performers to exchange video without any discernible delay by utilizing key attributes of IOWN.

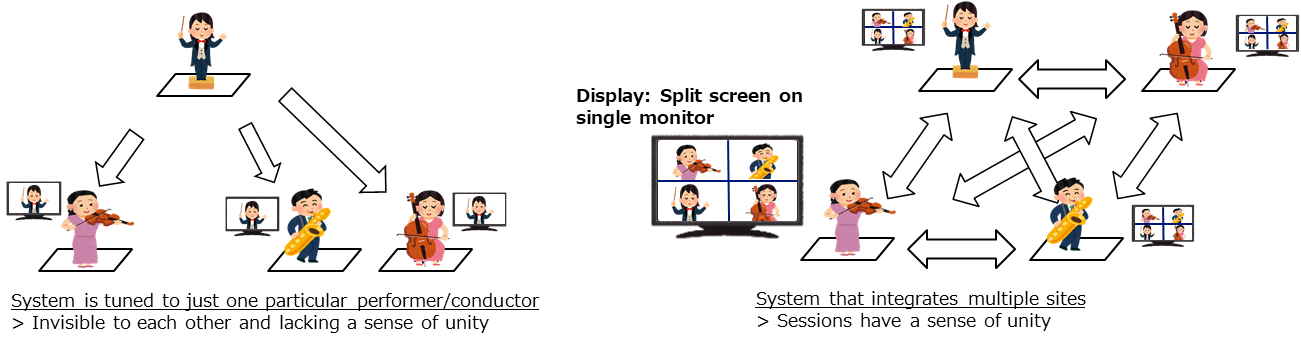

To realize a real-time remote session, performers must harmonize the timing of their playing by watching not only the conductor but also the movements of the other players. To achieve this, it is important to exchange not only audio but also video between multiple locations without delay (Figure 1). However, conventional Internet-based web conferencing, which is often used for remote work, suffers from video transmission delays because it runs over best-effort networks. Moreover, large delays arise in the processing that downscales the video from each location and composes it into a split-screen display (screen split display processing). This makes it difficult for musicians to match one another's timing, so effective remote sessions are hard to realize.

Figure 1 Matching of playing timing for integrated real-time remote sessions

2. Technical Point

NTT has proposed the IOWN concept as a next-generation communication platform. One feature of this concept is low-latency transmission achieved, for example, by a system that uses optical technology to transmit information without compression. In addition, unlike conventional best-effort networks, it enables transmission without delay fluctuations, so network systems and user experiences can be designed with a clear understanding of delay behavior.
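Transmitting without compression avoids the processing delay of encoding and decoding, but the cost is bandwidth. The back-of-the-envelope sketch below estimates the raw bit rate of uncompressed video. The format (1920×1080, 60 frames/s, 8-bit RGB at 24 bits per pixel) is an assumption, since this release does not state it, and blanking intervals and link overhead are ignored.

```python
def raw_video_bitrate(width: int, height: int, fps: int, bits_per_pixel: int) -> int:
    """Bit rate in bits/s of uncompressed active video; blanking intervals are ignored."""
    return width * height * bits_per_pixel * fps


# Assumed format (not stated in the release): 1920x1080 at 60 frames/s, 24 bits/pixel.
per_stream = raw_video_bitrate(1920, 1080, 60, 24)
four_streams = 4 * per_stream  # e.g. one stream from each of four sites

print(f"one stream:   {per_stream / 1e9:.2f} Gbit/s")   # one stream:   2.99 Gbit/s
print(f"four streams: {four_streams / 1e9:.2f} Gbit/s")  # four streams: 11.94 Gbit/s
```

Under these assumptions each uncompressed stream needs roughly 3 Gbit/s, which is why uncompressed transport depends on a high-capacity, low-jitter network rather than best-effort Internet links.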

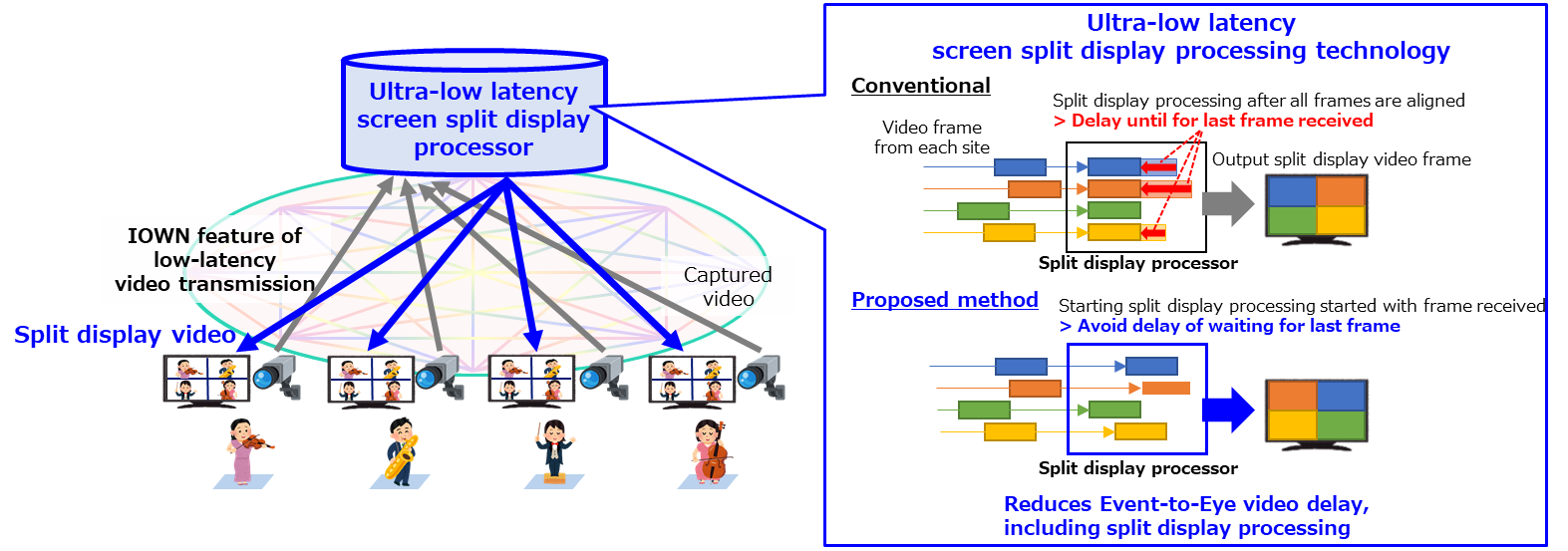

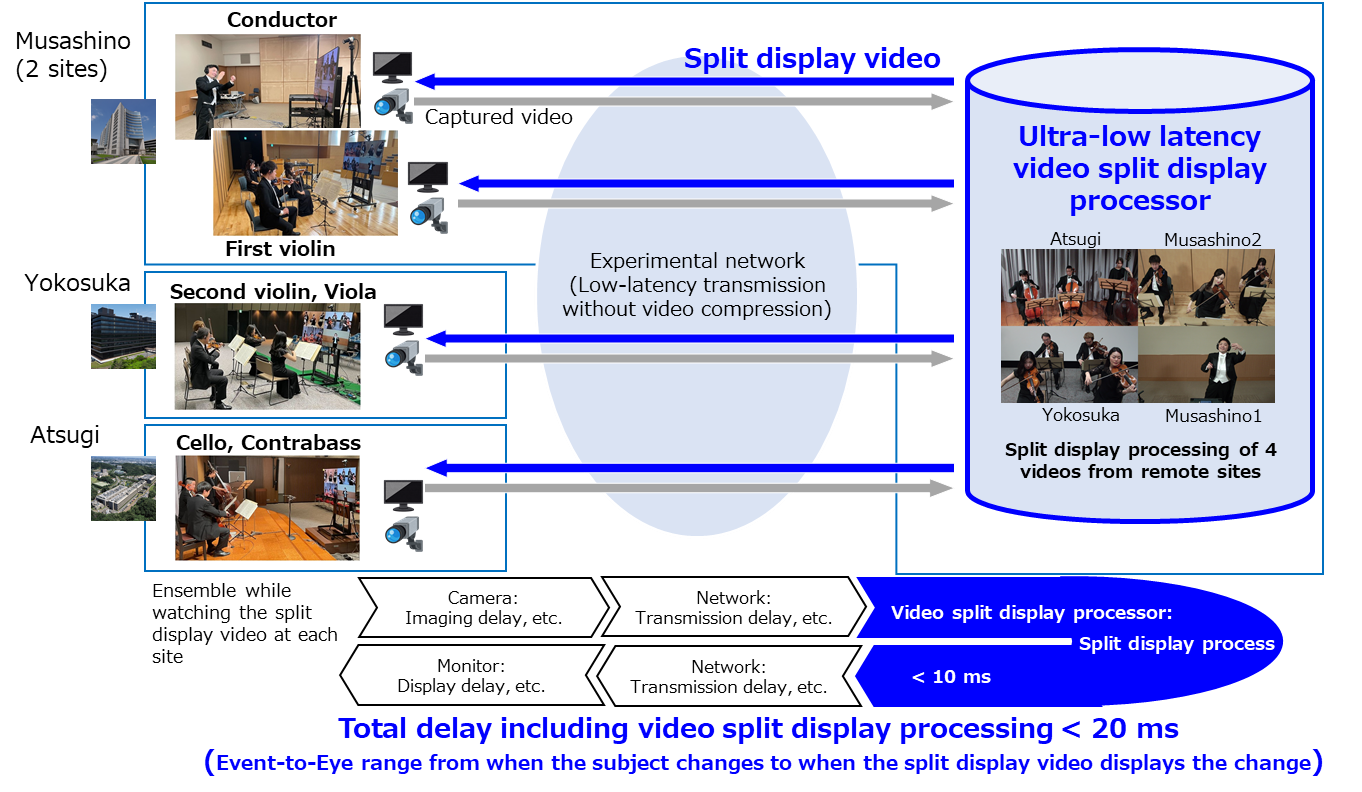

By taking advantage of this feature, we have realized a technology that forms a split screen from multiple video streams delivered from multiple locations with ultra-low latency (Figure 2). This makes it possible to reduce Event-to-Eye video delay (the time from when a change occurs in the subject until that change is displayed), including split display processing, without sacrificing the low-latency features of the All Photonics Network (APN), and to configure a system that allows multiple remote locations to exchange video without perceptible time lag.

This ultra-low latency screen split display processing technology reduces frame waiting latency and achieves ultra-low latency video output by using stream-type processing: rather than processing the video from each location frame by frame, it outputs the split display video while controlling the screen layout in the order in which the video arrives. We have confirmed that this split display processing technology can be implemented on FPGA (Field Programmable Gate Array) based hardware, with a delay of less than approximately 10 milliseconds from the time four asynchronous HDMI video streams are input to the device until the split display is output from it.
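To see why processing video as a stream rather than frame by frame reduces frame waiting latency, the following is a simplified timing model, not NTT's FPGA design. It assumes four asynchronous 60 Hz inputs with 1080 lines per frame (blanking ignored), a fixed 2×2 layout with 2:1 vertical downscaling, hypothetical phase offsets between the inputs, and zero processing time. A frame-buffered compositor must store a complete input frame and then wait for the next output frame boundary; a line-buffered (stream-type) compositor writes each input line as soon as the output raster reaches its row. Time is counted in integer line periods to keep the arithmetic exact.

```python
FPS = 60                      # assumption: inputs and output all run at 60 Hz
LINES = 1080                  # assumption: lines per frame period, blanking ignored
LINE_MS = 1000 / FPS / LINES  # duration of one line period in milliseconds
PHASES = [0, 270, 540, 810]   # hypothetical start offsets of the 4 inputs, in line periods


def ceil_div(a: int, b: int) -> int:
    """Integer ceiling of a / b for b > 0."""
    return -(-a // b)


def output_row(stream: int, line: int) -> int:
    """Row of the 2x2 split display showing input `line` after 2:1 vertical downscaling.
    Streams 0 and 1 fill the top half; streams 2 and 3 fill the bottom half."""
    return line // 2 + (LINES // 2 if stream >= 2 else 0)


def frame_buffered_latency(stream: int, line: int) -> int:
    """Latency in line periods when a whole input frame is stored before composing."""
    phase = PHASES[stream]
    arrival = phase + line + 1                  # time the line has been fully received
    complete = phase + LINES                    # time the whole input frame has been received
    start = ceil_div(complete, LINES) * LINES   # first output frame boundary at or after that
    return start + output_row(stream, line) - arrival


def stream_latency(stream: int, line: int) -> int:
    """Latency in line periods when each line is output as soon as the raster reaches its row."""
    arrival = PHASES[stream] + line + 1
    row = output_row(stream, line)
    # Output frame n scans `row` at time n * LINES + row; take the first such time >= arrival.
    shown = ceil_div(arrival - row, LINES) * LINES + row
    return shown - arrival


def worst_case_ms(latency_fn) -> float:
    """Largest latency over every line of every input, converted to milliseconds."""
    worst = max(latency_fn(s, y) for s in range(4) for y in range(LINES))
    return worst * LINE_MS


print(f"frame-buffered worst case: {worst_case_ms(frame_buffered_latency):.1f} ms")  # 33.3 ms
print(f"stream-type worst case:    {worst_case_ms(stream_latency):.1f} ms")          # 16.7 ms
```

In this model the frame-buffered worst case is just under two frame periods, while the stream-type worst case stays below one frame period, because the wait for a complete input frame disappears. The technology described above also controls the screen layout in arrival order, which this sketch does not model, so its numbers should not be compared directly with the measured device delay.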

Figure 2: Remote video communication using ultra-low latency screen split display processing technology in IOWN concept

3. Demonstration experiment

To confirm the effectiveness of this technology in an actual remote ensemble, we constructed an experimental network system linking NTT's three R&D centers in Musashino (two remote locations), Yokosuka, and Atsugi. HDMI video captured at each performance site was sent without compression, and the split display was formed and transmitted to each performer* (Figure 3). When we measured the delay characteristics of this experimental configuration, we confirmed that the system using our technology reduced the overall Event-to-Eye delay, including split display processing, to approximately 20 milliseconds or less. This is much shorter than the delay of several hundred milliseconds in conventional Internet-based web conferencing.

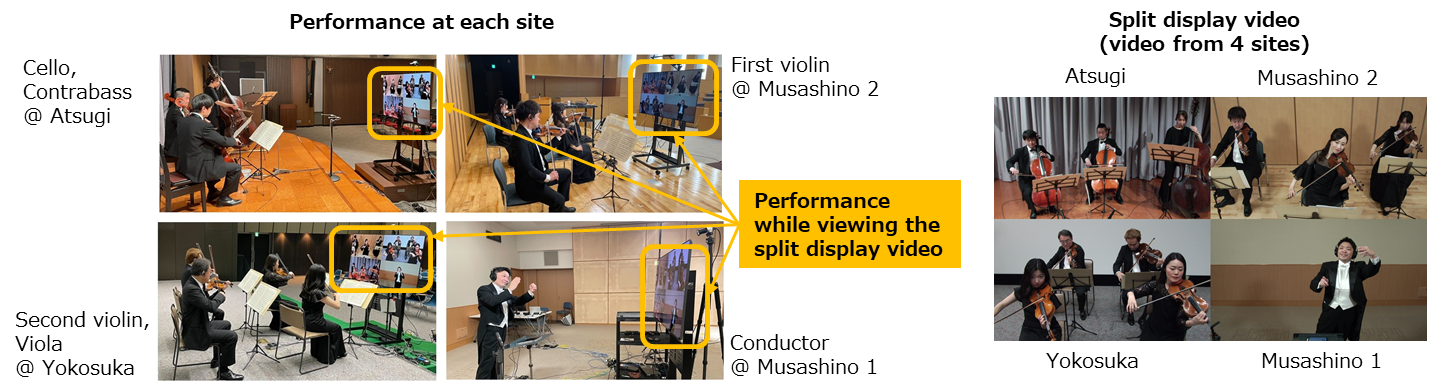

In the experiment, a conductor (Musashino 1), first violin (Musashino 2), second violin and viola (Yokosuka), and cello and contrabass (Atsugi) performed remotely while viewing the split display on a monitor at each location.

*With the cooperation of Japan Philharmonic Orchestra and Maestro Daisuke Nagamine (conducting)

Figure 3 Outline of the Demonstration Experiment

First, for comparison, when the remote ensemble played in an environment simulating high video delay (150 ms), there was a time lag between the split display video and the actual performance, so the timing of the performance seen in the video did not match between locations. This made it difficult to give the ensemble a sense of unity. By contrast, with our technology the performers could follow the movements of players at other locations in real time on the split display video. The experiment confirmed that the session was played without any gap in performance timing (Figure 4).
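To put the two delay figures (the measured Event-to-Eye bound of about 20 ms and the simulated 150 ms condition) in concrete terms, the small conversion below expresses each as a number of video frame periods, assuming 60 Hz video (the release does not state the frame rate), and as the distance sound travels in air over the same interval, taking about 343 m/s at 20 °C. These are reference conversions only and are not part of the reported measurements.

```python
FRAME_MS = 1000 / 60        # assumption: 60 Hz video, so one frame period is about 16.7 ms
SPEED_OF_SOUND_M_S = 343.0  # approximate speed of sound in air at about 20 °C


def in_frames(delay_ms: float) -> float:
    """Express a delay as a number of 60 Hz frame periods."""
    return delay_ms / FRAME_MS


def sound_travel_m(delay_ms: float) -> float:
    """Distance sound travels in air during the delay, in meters."""
    return SPEED_OF_SOUND_M_S * delay_ms / 1000


for label, delay_ms in [("measured Event-to-Eye bound", 20), ("simulated high delay", 150)]:
    print(f"{label}: {delay_ms} ms = {in_frames(delay_ms):.1f} frames, "
          f"sound travels {sound_travel_m(delay_ms):.2f} m")
# measured Event-to-Eye bound: 20 ms = 1.2 frames, sound travels 6.86 m
# simulated high delay: 150 ms = 9.0 frames, sound travels 51.45 m
```

Under these assumptions, 20 ms is comparable to the acoustic delay between players a few meters apart, while 150 ms corresponds to about nine frames of video lag.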

Furthermore, when we interviewed performers about their experience with the remote ensemble performance, we received comments such as "I did not feel any video delays" and "It was easy to perform because I was able to check videos from multiple locations at the same time."

As described above, we have confirmed that this technology can be used to realize harmonized performance without the stress of video delays.

Figure 4 Experimental results

4. Future development

Building on further evaluation of this demonstration experiment, we will advance our research and development toward a new style of remote video communication that provides a sense of unity without perceptible delay even between distant locations. In addition, we will work with NTT Group companies to study business feasibility and other aspects with a view to early commercialization.

Contact Information

NTT Information Network Laboratory Group

Planning Department, Public Relations Section

E-mail: inlg-pr-pb-ml@hco.ntt.co.jp
