
April 11, 2025

NTT Corporation

NTT has developed an AI inference LSI that enables real-time AI inference processing of ultra-high-definition video on edge devices and terminals

Extending the AI inference resolution limit to 4K with real-time, low-power operation

News Highlights:

- NTT has developed an AI inference LSI that enables real-time AI inference processing of ultra-high-definition video, such as 4K, on edge devices and terminals with strict power constraints.

- For example, when this LSI is installed on a drone, it enables infrastructure inspection while the drone flies safely beyond visual line of sight, helping to reduce labor and costs.

- NTT Innovative Devices Corporation plans to commercialize this LSI within fiscal year 2025.

TOKYO - April 11, 2025 - NTT Corporation (Headquarters: Chiyoda Ward, Tokyo; Representative Member of the Board and President: Akira Shimada; hereinafter "NTT") has developed an AI inference LSI[1] that enables real-time AI inference processing of ultra-high-definition video, such as 4K, on edge devices and terminals with strict power constraints.

In recent years, video AI has become increasingly important for improving the efficiency of operations and production. Demand is growing in particular for edge and terminal applications such as beyond-visual-line-of-sight (BVLOS) drone flight and people-flow analysis in public spaces. However, conventional technologies are limited in the resolution and throughput they can handle. The newly developed LSI raises the AI inference resolution limit to 4K while operating in real time and at low power. For example, when this LSI is installed on a drone, the drone can detect passersby and objects such as cars over a wide area from 150 m above the ground[2] (compared with around 30 m conventionally). This enables infrastructure inspection while the drone flies safely beyond visual line of sight, helping to reduce labor and costs.

This research result was exhibited at Upgrade 2025[3], held in San Francisco, USA, on April 9-10, 2025.

Background

With the advancement of deep learning technologies, using video AI to improve the efficiency of operations and production is becoming increasingly important. In particular, edge and terminal applications such as BVLOS drone flight, people-flow/traffic analysis, and automatic subject tracking need to detect objects over a wide area with a single ultra-high-definition camera, such as a 4K camera, in real time and at low power.

Edge and terminal applications with strict power constraints, such as those described above, use AI devices that consume tens of watts, over an order of magnitude less than the GPUs used in servers (hundreds of watts). However, the input image size for AI inference is generally limited to keep computational complexity down and to make training easier. As a result, even when video is captured by a 4K camera, each frame is reduced to a smaller image size for AI inference, so small objects lose detail and become difficult to detect.
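To make the effect of downscaling concrete, the short Python sketch that follows computes how much a 4K frame shrinks when it is fitted into the 608 × 608 input size cited in the Research Results section. It assumes a 3840 × 2160 (4K UHD) frame and aspect-ratio-preserving "letterbox" resizing with padding; letterboxing is common in YOLO implementations but is an assumption here, not something the release states.

```python
def letterbox(src_w: int, src_h: int, dst_w: int, dst_h: int):
    """Fit a src_w x src_h frame into a dst_w x dst_h network input while
    preserving aspect ratio; the unused area would be padded."""
    scale = min(dst_w / src_w, dst_h / src_h)
    return scale, round(src_w * scale), round(src_h * scale)

# Assumptions: 4K UHD frame (3840 x 2160) and a 608 x 608 network input.
scale, new_w, new_h = letterbox(3840, 2160, 608, 608)
print(f"scale factor {scale:.4f}: frame content becomes {new_w} x {new_h} px")
for obj_px in (16, 32, 64):
    # An object's width in pixels shrinks by the same factor as the frame.
    print(f"a {obj_px}-px-wide object becomes about {obj_px * scale:.1f} px wide")
```

Under these assumptions the scale factor is 608 / 3840 ≈ 0.158, so an object 32 pixels wide in the 4K frame ends up only about 5 pixels wide at the network input.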

Research Results

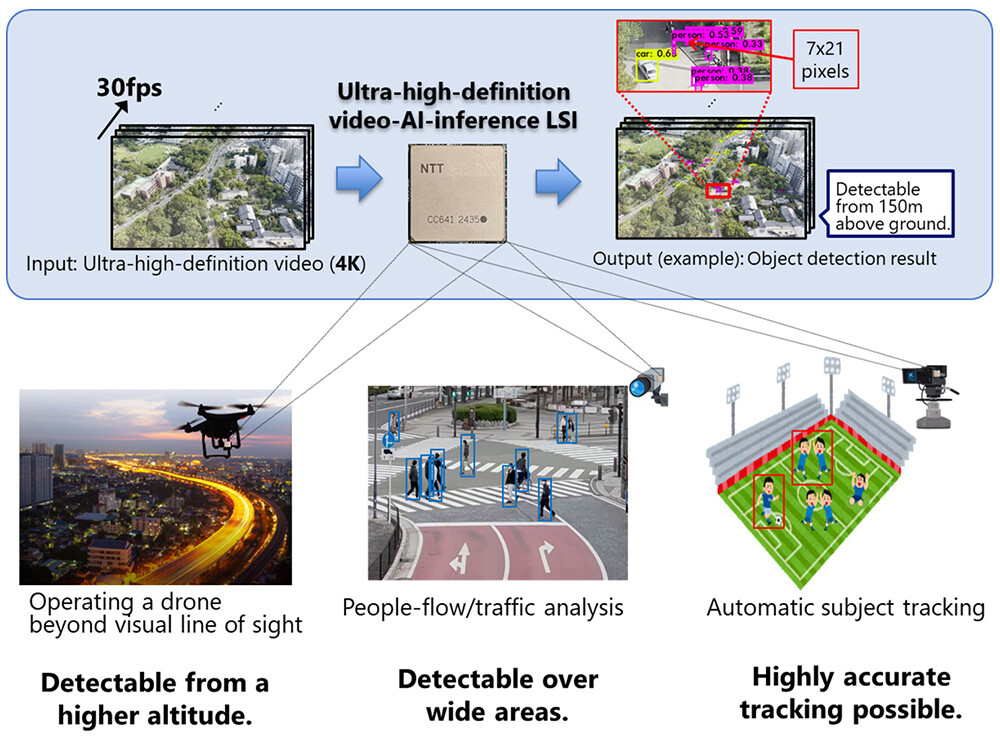

To solve these problems, NTT has been researching and developing high-definition video-AI-inference hardware and has now developed an AI inference LSI that enables 4K real-time AI inference processing (Figure 1). When YOLOv3[4] is executed on this LSI, real-time object detection (30 fps) at 4K resolution is possible with power consumption (less than 20 watts) equal to or lower than that of a general edge and terminal AI device running object detection at reduced resolution (608 × 608 pixels). As a result, for example, a drone equipped with this LSI can use AI inference to check for passersby and objects such as cars beneath its flight path, which is necessary for safe navigation beyond visual line of sight, even from altitudes of up to 150 m. This LSI also enables detection over a wider area for people-flow/traffic analysis services in public spaces, and more accurate tracking in automatic subject tracking.
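The figures in the paragraph above imply a tight per-frame budget. This back-of-the-envelope Python calculation assumes a 3840 × 2160 frame and treats 20 W as an upper bound on average power; it compares the 4K pixel count with the 608 × 608 input and derives the time and energy available per frame at 30 fps.

```python
# Back-of-the-envelope budget for 4K real-time inference.
FRAME_W, FRAME_H = 3840, 2160  # assumed 4K UHD frame size
NET_W, NET_H = 608, 608        # reduced input size cited in the text
FPS = 30                       # real-time frame rate cited in the text
POWER_W = 20.0                 # cited upper bound on power consumption

frame_budget_ms = 1000.0 / FPS                       # time available per frame
pixel_ratio = (FRAME_W * FRAME_H) / (NET_W * NET_H)  # 4K pixels vs 608 x 608 pixels
pixels_per_second = FRAME_W * FRAME_H * FPS          # raw 4K pixel throughput
energy_per_frame_j = POWER_W / FPS                   # upper bound on energy per frame

print(f"time per frame: {frame_budget_ms:.1f} ms")
print(f"a 4K frame has {pixel_ratio:.1f}x the pixels of a 608 x 608 input")
print(f"pixel throughput: {pixels_per_second:,} pixels/s")
print(f"energy per frame: < {energy_per_frame_j:.3f} J")
```

Roughly 22 times as many pixels must be handled within the same 33 ms frame interval, which is why the Key Technical Details section pairs image division with an engine that reduces computational complexity.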

Figure 1 Overview of Ultra-High-Definition Video-AI-Inference LSI

Key Technical Details

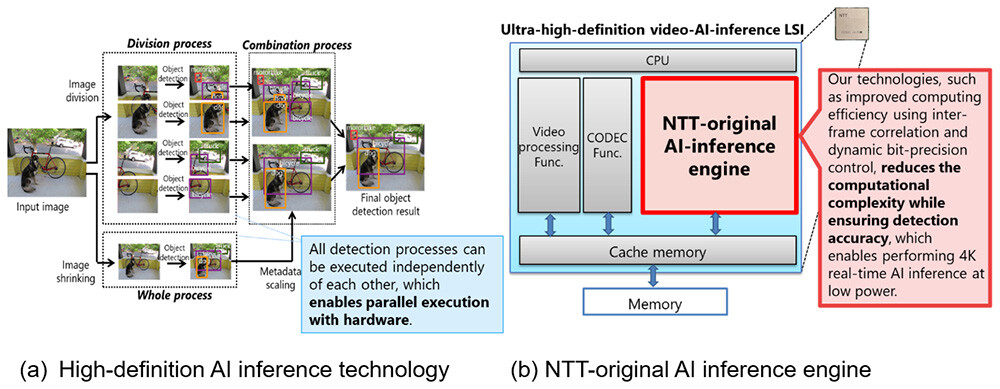

(1) High-definition AI inference technology

The basic processing flow of this technology is shown in Figure 2(a). First, to perform AI inference without shrinking the image, the input image is divided into smaller images and AI inference is performed on each one. This makes it possible to detect small objects. In parallel, AI inference is also performed on a shrunk version of the whole image to detect large objects that straddle the boundaries between divided images. The final detection results are output by combining the results from the divided images with those from the shrunk whole image. As a result, both small and large objects can be detected in 4K video. In addition, all detection processes are independent of one another, so they can be executed in parallel in hardware.
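The divide, infer, and combine flow described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not NTT's implementation: the 608-pixel square tile size, the tile layout (last tile flush with the frame edge), the box format `(x0, y0, x1, y1, score)`, and the use of class-agnostic greedy non-maximum suppression (NMS) to combine results are all hypothetical choices; the release only says that the results are combined.

```python
from typing import List, Sequence, Tuple

# Hypothetical box format: (x0, y0, x1, y1, score) in pixel coordinates.
Box = Tuple[float, float, float, float, float]

def axis_starts(length: int, tile: int, overlap: int = 0) -> List[int]:
    """Tile start offsets along one axis; the last tile is flush with the edge."""
    if length <= tile:
        return [0]
    starts = list(range(0, length - tile, tile - overlap))
    starts.append(length - tile)
    return starts

def tile_grid(width: int, height: int, tile: int, overlap: int = 0) -> List[Tuple[int, int]]:
    """(x, y) offsets of square tiles that together cover the whole frame."""
    return [(x, y)
            for y in axis_starts(height, tile, overlap)
            for x in axis_starts(width, tile, overlap)]

def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def nms(boxes: Sequence[Box], iou_thr: float = 0.5) -> List[Box]:
    """Greedy class-agnostic NMS: keep the best-scoring box of each overlapping group."""
    kept: List[Box] = []
    for box in sorted(boxes, key=lambda b: b[4], reverse=True):
        if all(iou(box, k) < iou_thr for k in kept):
            kept.append(box)
    return kept

def merge(tile_dets, whole_dets: Sequence[Box], whole_scale: float,
          iou_thr: float = 0.5) -> List[Box]:
    """Combine per-tile and whole-image detections in full-frame coordinates.

    tile_dets:   list of ((x_off, y_off), boxes in that tile's coordinates)
    whole_dets:  boxes found on the shrunk whole image
    whole_scale: shrink factor used for the whole image (e.g. 608 / 3840)
    """
    merged: List[Box] = []
    for (ox, oy), dets in tile_dets:
        # Shift tile-local boxes by the tile's offset in the full frame.
        merged += [(x0 + ox, y0 + oy, x1 + ox, y1 + oy, s) for x0, y0, x1, y1, s in dets]
    inv = 1.0 / whole_scale
    # Scale whole-image boxes back up to full-frame coordinates.
    merged += [(x0 * inv, y0 * inv, x1 * inv, y1 * inv, s) for x0, y0, x1, y1, s in whole_dets]
    # Remove duplicates found by both a tile and the whole-image pass.
    return nms(merged, iou_thr)

offsets = tile_grid(3840, 2160, 608)  # assumed 4K frame, 608-px tiles
print(len(offsets), "tiles + 1 shrunk whole-image pass per frame")
```

With these assumptions a 3840 × 2160 frame yields 28 tiles plus one shrunk whole-image pass per frame. Because no pass depends on another, all 29 inference calls could be dispatched concurrently, which mirrors the parallelism the paragraph above attributes to the hardware.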

(2) NTT-original AI inference engine

The high-definition technology described above requires running inference on a large number of divided images for 4K video, which significantly increases computational complexity and makes real-time processing difficult even with parallel hardware computation. The NTT-original AI inference engine (Figure 2(b)) therefore reduces computational complexity while maintaining detection accuracy by applying NTT technologies such as improved computing efficiency using inter-frame correlation and dynamic bit-precision control. This achieves 4K real-time AI inference at low power.
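The two efficiency ideas named above can be illustrated generically. The following Python sketch is not NTT's engine, whose internals the release does not describe. It shows (a) a hypothetical inter-frame shortcut that reuses a tile's previous result when its pixels have barely changed since the last inference, and (b) a hypothetical dynamic bit-precision rule that picks the smallest bit width whose quantization error stays within a tolerance. All thresholds, bit widths, and function names are illustrative assumptions.

```python
from typing import Callable, Dict, List, Sequence

def mean_abs_diff(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean absolute pixel difference between two equally sized tiles."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

class TileCache:
    """(a) Inter-frame reuse (hypothetical): skip inference on a tile whose
    pixels differ little from the frame on which it was last inferred."""

    def __init__(self, threshold: float):
        self.threshold = threshold
        self.ref_pixels: Dict[int, Sequence[float]] = {}
        self.ref_result: Dict[int, object] = {}
        self.inferences = 0  # number of times the model was actually run

    def run(self, tile_id: int, pixels: Sequence[float], infer: Callable):
        ref = self.ref_pixels.get(tile_id)
        if ref is not None and mean_abs_diff(ref, pixels) < self.threshold:
            return self.ref_result[tile_id]  # reuse the cached result
        result = infer(pixels)
        self.inferences += 1
        self.ref_pixels[tile_id] = pixels
        self.ref_result[tile_id] = result
        return result

def quantize(values: Sequence[float], bits: int) -> List[float]:
    """Symmetric uniform quantization to signed `bits`-bit levels, then back to floats."""
    qmax = 2 ** (bits - 1) - 1
    scale = max(abs(v) for v in values) / qmax or 1.0  # avoid a zero scale
    return [round(v / scale) * scale for v in values]

def choose_bits(values: Sequence[float], max_error: float,
                candidates: Sequence[int] = (4, 6, 8, 16)) -> int:
    """(b) Dynamic precision (hypothetical): smallest bit width whose
    worst-case quantization error is within max_error."""
    for bits in candidates:
        q = quantize(values, bits)
        if max(abs(v - w) for v, w in zip(values, q)) <= max_error:
            return bits
    return candidates[-1]

def fake_infer(pixels: Sequence[float]) -> float:
    """Stand-in for a detector call (hypothetical)."""
    return sum(pixels)

cache = TileCache(threshold=2.0)
cache.run(0, [10, 10, 10], fake_infer)  # first frame: inferred
cache.run(0, [11, 10, 10], fake_infer)  # nearly unchanged: cached result reused
cache.run(0, [50, 50, 50], fake_infer)  # large change: inferred again
print(cache.inferences)  # → 2
print(choose_bits([0.5, -1.0, 0.25], 0.1), choose_bits([0.5, -1.0, 0.25], 0.01))  # → 4 8
```

Comparing against the frame on which a tile was last inferred, rather than the immediately preceding frame, keeps slow cumulative changes from being skipped indefinitely.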

Figure 2 Technical Points

Outlook

NTT Innovative Devices Corporation plans to commercialize this LSI within fiscal year 2025. NTT laboratories will continue to develop further technologies related to this LSI to expand the range of supported inference models and use cases.

[Glossary]

[1] LSI: Large Scale Integration

[2] The maximum altitude at which a drone can normally fly under Japan's Civil Aeronautics Act.

[3] https://ntt-research.com/ntt-to-showcase-global-research-innovation-at-upgrade-2025/

[4] YOLO: You Only Look Once

About NTT

NTT contributes to a sustainable society through the power of innovation. We are a leading global technology company providing services to consumers and businesses as a mobile operator, infrastructure, networks, applications, and consulting provider. Our offerings include digital business consulting, managed application services, workplace and cloud solutions, data center and edge computing, all supported by our deep global industry expertise. We have over $92B in revenue and 330,000 employees, with $3.6B in annual R&D investment. Our operations span 80+ countries and regions, allowing us to serve clients in over 190 of them. We serve over 75% of Fortune Global 100 companies, thousands of other enterprise and government clients, and millions of consumers.

Media contact

NTT IOWN Integrated Innovation Center

Public Relations

Inquiry form

Information is current as of the date of issue of the individual press release.

Please be advised that information may be outdated after that point.
