November 7, 2025

NTT, Inc.

Establishment of Technology for Automatic Evaluation of Remote Monitoring Video Quality for Level 4 Autonomous Driving

~Achieved standardization of the "Parametric object-recognition-ratio-estimation model" as new ITU-T Recommendation P.1199~

News Highlights:

- NTT has established the Parametric object-recognition-ratio-estimation model*1, a technology that automatically estimates whether the remote monitoring video and vehicle-related information transmitted from autonomous vehicles to the remote control room are of sufficient quality to reliably detect objects suddenly appearing in front of the vehicle.

- ITU-T SG12*2 verified the high accuracy of the Parametric object-recognition-ratio-estimation model and adopted it as new ITU-T Recommendation P.1199 in November 2025.

- Incorporating this technology into remote monitoring systems for autonomous driving is expected to enhance safety by generating alerts when the quality of remote monitoring video deteriorates to the point that safe supervision is no longer possible, contributing to the realization of safe autonomous driving.

TOKYO - November 7, 2025 - NTT, Inc. (Headquarters: Chiyoda-ku, Tokyo; President and CEO: Akira Shimada; hereinafter "NTT") has established the Parametric object-recognition-ratio-estimation model, a technology that estimates whether remote monitoring video transmitted from autonomous vehicles to a remote control room is of sufficient quality to reliably detect objects suddenly appearing in the vehicle's path. With this technology, providers of remote monitoring systems can determine in real time whether the video quality allows operators to monitor safely. This makes it possible to issue alerts when safe remote monitoring of autonomous vehicles is compromised, contributing to the realization of safe autonomous driving.

This technology was adopted as ITU-T Recommendation P.1199 by ITU-T SG12, an international standardization body, in November 2025 (https://www.itu.int/rec/T-REC-P.1199-202510-P/en).

Background

Research and development of autonomous driving systems is progressing as a way to alleviate traffic congestion and driver shortages. At Level 5 autonomous driving, vehicles achieve safe operation by using diverse sensor data to detect pedestrians, oncoming vehicles, and other objects. However, object detection technology*3, which analyzes sensor data in this way, cannot by itself guarantee that every object is detected, so fully safe autonomous driving is difficult to achieve with it alone.

Therefore, at Level 4 autonomous driving*4 (specific automated driving), traffic regulations mandate the installation of a remote control room and the assignment of a designated remote operator. In addition, the associated enforcement regulations require that the remote operator be notified if video transmitted from autonomous vehicles is unclear or cannot be properly transmitted and received.

Challenges

Current remote monitoring systems lack criteria for determining whether video transmitted from autonomous vehicles is clear enough for effective monitoring. Remote monitoring video is received from autonomous vehicles via wireless communication. When wireless quality deteriorates, the network bandwidth available for transmitting video decreases, which can lower the video bitrate and cause packet loss.

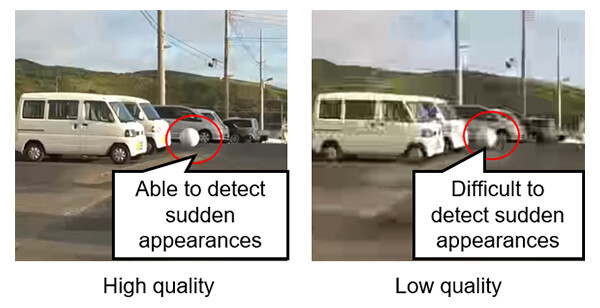

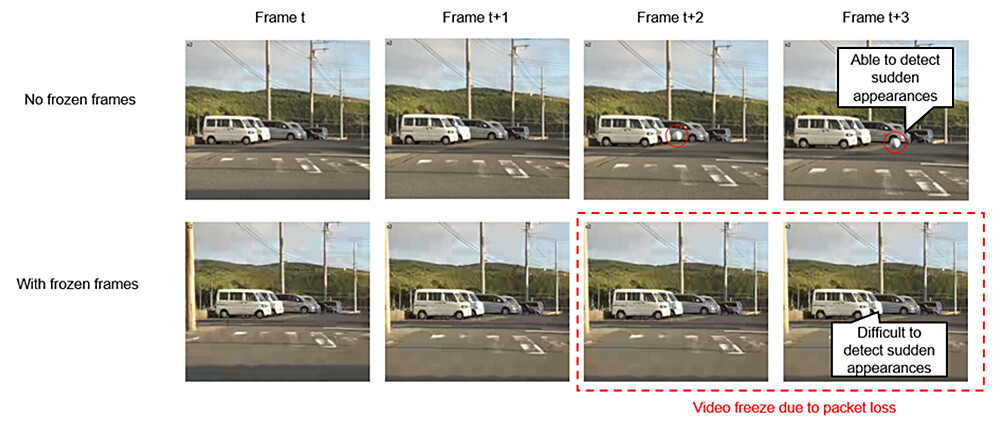

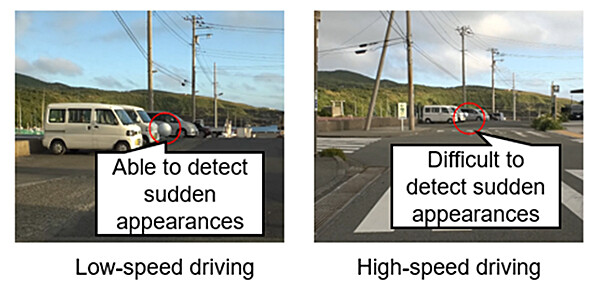

A decrease in bitrate lowers video quality, making it more difficult for remote operators to detect objects suddenly appearing in front of the autonomous vehicle (Figure 1). In addition, packet loss can cause video frames to freeze, which also makes it harder to recognize suddenly appearing objects (Figure 2). However, it had not been clear how much video quality degradation, or how large an increase in the number of frozen frames*5, leaves remote operators unable to detect such objects.

Figure 1 Impact of decreased video quality on the recognition of suddenly appearing objects

Figure 2 Impact of the number of frozen video frames on the recognition of suddenly appearing objects

In addition, autonomous vehicles adjust their driving speed according to surrounding conditions. When the driving speed changes, the distance at which objects must be detected also changes. At higher speeds, the vehicle travels a longer distance per unit of time, so if the video is unclear, the remote operator must be notified more quickly.
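
The speed effect follows from distance = speed × time. As a rough illustration, the sketch below computes how far a vehicle travels while the monitoring video is frozen for a fixed interval. The function name `distance_travelled_m`, the 15-frame freeze at 30 fps, and the example speeds are assumptions chosen for illustration, not values from the Recommendation.

```python
def distance_travelled_m(speed_kmh: float, interval_s: float) -> float:
    """Return the distance in metres covered at a constant speed over an interval."""
    speed_ms = speed_kmh * 1000.0 / 3600.0  # convert km/h to m/s
    return speed_ms * interval_s


# Illustrative assumption: 15 consecutive frozen frames at 30 fps = 0.5 s of unusable video
FREEZE_S = 15 / 30

for speed in (10, 20, 40):
    d = distance_travelled_m(speed, FREEZE_S)
    print(f"{speed:>2} km/h: {d:.2f} m travelled during a {FREEZE_S:.1f} s freeze")
```

The distance grows in proportion to speed (about 1.39 m at 10 km/h versus 5.56 m at 40 km/h here), which is why faster driving leaves less margin for an unclear or frozen picture.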

As a result, when the vehicle is moving at a higher speed, objects need to be detected from a greater distance, making it more difficult to recognize objects suddenly appearing in front of the vehicle (Figure 3). However, the impact of changes in driving speed on the detection of such objects had not yet been clarified.

Figure 3 Impact of driving speed on the recognition of suddenly appearing objects

Research Achievements

NTT has established the Parametric object-recognition-ratio-estimation model, a technology that estimates whether the remote monitoring video and vehicle-related information transmitted from autonomous vehicles to a remote control room are of sufficient quality for remote operators to detect objects suddenly appearing in front of the vehicle. This technology has been adopted as ITU-T Recommendation P.1199.

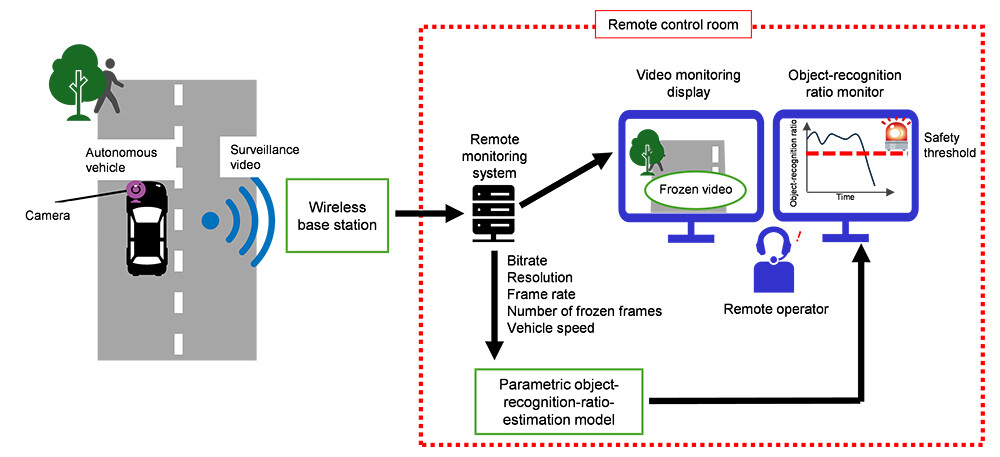

Applying this technology in the remote control room makes it possible to generate alerts when the quality of the video displayed on monitoring screens drops below the level needed to detect suddenly appearing objects, or when the number of frozen video frames increases. This allows remote operators to notice immediately short-term video freezes or quality degradation that might otherwise be overlooked. Consequently, measures such as slowing down or stopping autonomous vehicles can be taken, contributing to the realization of safe autonomous driving (Figure 4).

Figure 4 Example application of this technology
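
The alerting step in the remote control room can be sketched as a threshold check on the estimated object-recognition ratio. In this minimal sketch, the names `check_monitoring_quality`, `AlertDecision`, and `MIN_RECOGNITION_RATIO`, the 0.9 threshold, the once-per-second estimates, and the representation of the ratio as a fraction between 0 and 1 are all hypothetical; the source does not state what threshold an operator should use.

```python
from dataclasses import dataclass

# Hypothetical threshold: the source does not specify one. Each operator
# would choose a value from its own safety requirements.
MIN_RECOGNITION_RATIO = 0.9


@dataclass
class AlertDecision:
    alert: bool
    reason: str


def check_monitoring_quality(estimated_ratio: float,
                             threshold: float = MIN_RECOGNITION_RATIO) -> AlertDecision:
    """Flag an alert when the estimated object-recognition ratio (0..1) is below threshold."""
    if not 0.0 <= estimated_ratio <= 1.0:
        raise ValueError("estimated_ratio must be between 0 and 1")
    if estimated_ratio < threshold:
        return AlertDecision(True, f"estimated ratio {estimated_ratio:.2f} below {threshold:.2f}")
    return AlertDecision(False, "quality sufficient")


# Example: one estimate per second from a (hypothetical) estimator
for t, ratio in enumerate([0.97, 0.95, 0.82, 0.78, 0.93]):
    decision = check_monitoring_quality(ratio)
    if decision.alert:
        print(f"t={t}s ALERT: {decision.reason}")
```

With these inputs, alerts are printed at t=2 s and t=3 s only.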

Technical Highlights

Parametric object-recognition-ratio-estimation model

This technology formalizes, as a mathematical algorithm, the relationship between parameters of the remote monitoring video and the object-recognition characteristics obtained through subjective evaluation; this algorithm is the Parametric object-recognition-ratio-estimation model. Subjective evaluation experiments were used to derive how each of the following factors affects the ability to detect objects suddenly appearing in front of autonomous vehicles:

- Video encoding parameters, which depend on the available network bandwidth (bitrate, resolution, frame rate)

- Number of frozen video frames due to packet loss

- Vehicle information, specifically driving speed
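
One way a monitoring system might hold the inputs listed above is a single record per measurement interval. The class name `MonitoringConditions`, its field names, and the units (kbit/s, pixels, fps, km/h) are hypothetical choices for this sketch; P.1199 defines its own input parameters.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MonitoringConditions:
    """Hypothetical input record for one measurement interval."""
    bitrate_kbps: float    # encoded video bitrate
    width_px: int          # encoded resolution, width
    height_px: int         # encoded resolution, height
    frame_rate_fps: float  # encoded frame rate
    frozen_frames: int     # frames frozen because of packet loss
    speed_kmh: float       # vehicle driving speed

    def __post_init__(self) -> None:
        # Reject physically meaningless values before they reach an estimator
        if self.bitrate_kbps <= 0 or self.frame_rate_fps <= 0:
            raise ValueError("bitrate and frame rate must be positive")
        if self.width_px <= 0 or self.height_px <= 0:
            raise ValueError("resolution must be positive")
        if self.frozen_frames < 0 or self.speed_kmh < 0:
            raise ValueError("frozen frame count and speed must be non-negative")


# Illustrative values: 1920x1080 at 30 fps and 2,000 kbit/s, no freezes, 30 km/h
sample = MonitoringConditions(2000, 1920, 1080, 30, 0, 30)
```

Making the record immutable (`frozen=True`, unrelated to frozen video frames) keeps each measurement unchanged once it has been handed to an estimator.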

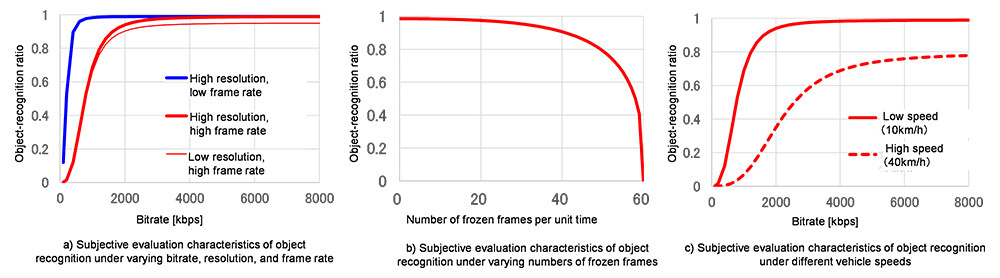

In the subjective evaluation experiments, object-recognition ratios were measured under numerous conditions with varying encoding parameters (bitrate, resolution, frame rate), numbers of frozen frames, and driving speeds. These results were used to derive the subjective evaluation characteristics.
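
Following the glossary definition of the object-recognition ratio (the share of suddenly appearing objects recognized in time), per-condition ratios could be tallied from individual trials as below. The function name `recognition_ratios`, the `(condition_label, recognised_in_time)` trial format, and the data are hypothetical; the source does not describe how its experiment records were stored.

```python
from collections import defaultdict


def recognition_ratios(trials: list[tuple[str, bool]]) -> dict[str, float]:
    """Return, per condition, the fraction of trials in which the object was recognised in time.

    Each trial is (condition_label, recognised_in_time).
    """
    hits: dict[str, int] = defaultdict(int)
    totals: dict[str, int] = defaultdict(int)
    for condition, recognised in trials:
        totals[condition] += 1
        if recognised:
            hits[condition] += 1
    return {c: hits[c] / totals[c] for c in totals}


# Hypothetical records, not experimental data from the source
trials = [
    ("cond_A", True), ("cond_A", True), ("cond_A", False), ("cond_A", True),
    ("cond_B", True), ("cond_B", False),
]
print(recognition_ratios(trials))  # {'cond_A': 0.75, 'cond_B': 0.5}
```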

- Impact of reduced bitrate, resolution, and frame rate on object-recognition ratio

When wireless quality deteriorates, the bitrate decreases. The lower bitrate, together with any resulting reduction in resolution and frame rate, degrades video quality as shown in Figure 1. Objects suddenly appearing in front of the vehicle become less distinct, making it difficult for remote operators to detect them.

- Impact of increased packet loss on object-recognition ratio

When wireless quality deteriorates and packet loss occurs, the number of frozen video frames increases. As shown in Figure 2, this causes the monitoring video to freeze, making it difficult for remote operators to recognize suddenly appearing objects.

- Impact of driving speed on object-recognition ratio

At higher speeds, objects must be detected from a greater distance. As shown in Figure 3, this makes it more difficult for remote operators to recognize objects suddenly appearing in front of the vehicle.
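
How packet loss turns into frozen frames depends on the receiver. One possible concealment strategy is to hold the last correctly decoded frame until the next intact keyframe arrives, because frames that reference a damaged frame cannot be decoded cleanly. The sketch below counts frozen frames under that assumption; the function name `count_frozen_frames` and the per-frame `(is_keyframe, received_intact)` input are hypothetical, and P.1199 or a given receiver may count freezes differently.

```python
def count_frozen_frames(frames: list[tuple[bool, bool]]) -> int:
    """Count frozen frames, given (is_keyframe, received_intact) per frame in display order.

    Assumes freeze concealment: after any damaged frame, the last good frame is
    shown until the next intact keyframe.
    """
    frozen = 0
    holding = False  # True while the display is held on the last good frame
    for is_keyframe, intact in frames:
        if holding and is_keyframe and intact:
            holding = False  # intact keyframe: normal decoding resumes
        elif not intact:
            holding = True   # damaged frame: start (or continue) the freeze
        if holding:
            frozen += 1
    return frozen


# Keyframe every 4 frames; frame 5 is damaged, so frames 5-7 freeze until keyframe 8
gop = [(i % 4 == 0, i != 5) for i in range(12)]
print(count_frozen_frames(gop))  # 3
```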

Based on the results of subjective evaluation experiments, the effects of these factors on object-recognition ratio were derived as the subjective evaluation characteristics shown in Figure 5(a–c).

Figure 5 Subjective evaluation characteristics of object recognition with respect to bitrate, resolution, frame rate, number of frozen video frames, and driving speed
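
A parametric model of this kind maps each input to an estimated ratio that moves in the direction the experiments show: down with lower bitrate, resolution, or frame rate, more frozen frames, or higher speed. Purely to show that shape, the sketch below multiplies saturating quality terms by exponential penalties. The functional form, every coefficient, and the name `toy_recognition_ratio` are invented for illustration; they are not the equations or coefficients of ITU-T Recommendation P.1199, which should be taken from the Recommendation itself.

```python
import math


def toy_recognition_ratio(bitrate_kbps: float, height_px: int, frame_rate_fps: float,
                          frozen_frames: int, speed_kmh: float) -> float:
    """Purely illustrative parametric form; NOT the P.1199 model.

    Each factor lies between 0 and 1; the coefficients are invented placeholders.
    """
    # Encoding quality: saturating increase with bitrate, resolution and frame rate
    q_bitrate = 1.0 - math.exp(-bitrate_kbps / 500.0)
    q_resolution = 1.0 - math.exp(-height_px / 360.0)
    q_frame_rate = 1.0 - math.exp(-frame_rate_fps / 10.0)
    # Penalties that shrink the ratio as frozen frames and speed grow
    p_freeze = math.exp(-0.05 * frozen_frames)
    p_speed = math.exp(-0.01 * speed_kmh)
    return q_bitrate * q_resolution * q_frame_rate * p_freeze * p_speed


base = toy_recognition_ratio(2000, 1080, 30, 0, 20)
slower_link = toy_recognition_ratio(500, 1080, 30, 0, 20)
faster_car = toy_recognition_ratio(2000, 1080, 30, 0, 40)
print(f"base={base:.3f} low_bitrate={slower_link:.3f} high_speed={faster_car:.3f}")
```

The multiplicative structure is one simple way to let any single degraded factor pull the estimate down; a fitted model could combine the factors quite differently.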

Detailed information about this technology is available at <>. Applying this technology to remote monitoring systems in accordance with the Recommendation, as shown in Figure 4, can enhance the safety of remote monitoring.

Future Developments

This technology enables real-time assessment of whether remote monitoring video transmitted from autonomous vehicles to a remote control room is of sufficient quality for object recognition, thereby contributing to the improvement of autonomous driving safety. Going forward, field trials will be conducted using this technology to verify that issuing alerts when video quality deteriorates enhances the monitoring efficiency of remote operators.

[Glossary]

*1 Object-recognition ratio

The percentage of objects suddenly appearing in the video that a remote operator can recognize in time to prevent a collision with the autonomous vehicle.

*2 ITU-T SG12

International Telecommunication Union Telecommunication Standardization Sector (ITU-T) Study Group 12 (SG12) is the expert group responsible for the development of international standards (ITU-T Recommendations) on performance, quality of service (QoS) and quality of experience (QoE). This work spans the full spectrum of terminals, networks and services, ranging from speech over fixed circuit-switched networks to multimedia applications over mobile and packet-based networks.

*3 Object detection technology

Technology that acquires and analyzes forward-facing video from sensors installed on an autonomous vehicle to detect pedestrians, oncoming vehicles, and other surrounding objects.

*4 Autonomous driving level

Autonomous driving levels are defined based on the degree of driver involvement. At Level 4, the system performs all driving tasks under specific conditions. See JASO Technical Paper "Taxonomy and definitions for terms related to driving automation systems for On-Road Motor Vehicles" (issued 2022.4.1) for detailed definitions.

*5 Number of frozen video frames

The total number of video frames that stop (freeze) due to packet loss.

About NTT

NTT contributes to a sustainable society through the power of innovation. We are a leading global technology company providing services to consumers and businesses as a mobile operator, infrastructure, networks, applications, and consulting provider. Our offerings include digital business consulting, managed application services, workplace and cloud solutions, data center and edge computing, all supported by our deep global industry expertise. We have over $90B in revenue and 340,000 employees, with $3B in annual R&D investments. Our operations span 80+ countries and regions, allowing us to serve clients in over 190 of them. We serve over 75% of Fortune Global 100 companies, thousands of other enterprise and government clients and millions of consumers.

Media contact

NTT, Inc.

NTT Information Network Laboratory Group

Public Relations

Inquiry form

Information is current as of the date of issue of the individual press release.

Please be advised that information may be outdated after that point.
