Microsoft ends support for Internet Explorer on June 16, 2022.

We recommend using one of the browsers listed below.

- Microsoft Edge(Latest version)

- Mozilla Firefox(Latest version)

- Google Chrome(Latest version)

- Apple Safari(Latest version)

Please contact your browser provider for download and installation instructions.

Yes or No?

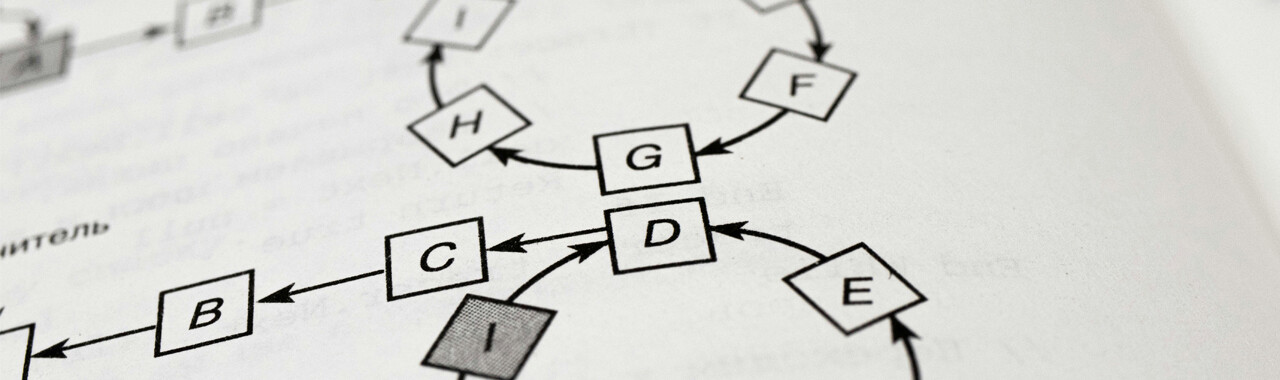

Binary Decision Diagrams (BDDs) are a way to organize and simplify yes/no decisions in computing. Since their development in the 1980s, they have been used in circuit design, network analysis, and artificial intelligence (AI). BDDs help engineers represent logical choices without having to manually evaluate every possible combination. By putting decision paths into a diagram, BDDs allow complex logical relationships to be processed simply and efficiently.

However, while BDDs are widely used, the "worst-case time" complexity of many operations has been unknown—until now.

What are Binary Decision Diagrams?

But let's not get ahead of ourselves. What are BDDs, exactly?

A Binary Decision Diagram is a data structure used to represent and process logical decisions. Imagine a flowchart that organizes different paths a decision might take, using a series of yes/no choices. Instead of listing every possible outcome separately, BDDs streamline decision-making by taking out redundant paths and merging equivalent choices. That makes them very handy whenever complex decisions have to be made quickly and accurately.

They're Really Useful

For example, in circuit design, BDDs help engineers model how a circuit will behave under different conditions. In network analysis, they can represent possible routes for data to travel. When it comes to AI, BDDs help to structure decision-making processes. They have become essential in so many computing applications because of their ability to handle logical structures in a compact form.

But They Have a Major Problem

There's one thing missing, however. Recent studies undertaken by NTT have shown, conclusively, that certain BDD operations take exponentially longer to process as the input size increases. Previously, textbooks—notably Donald Knuth's The Art of Computer Programming (TAOCP)—suggested that some operations might be computable in "polynomial" time, meaning they could be solved reasonably quickly, even with large inputs. NTT's research shows that it's not true; some large-scale operations follow exponential time complexity in the worst case. Because of this proof, TAOCP is due to be updated to correct the misunderstanding in its next edition.

Why Worst-Case Time Complexity Matters

What does it mean?

There is huge value in NTT's research. Understanding worst-case time complexity is important, because it tells us how long an operation might take in the most difficult scenario. To illustrate the point in simple terms, imagine searching for a book in a vast library:

Best case: The book is right in front of you—you find it instantly.

Worst case: The book is in the last row of the highest shelf, forcing you to check every single book before finding it.

In computing, some operations become much, much slower as the input size increases. If a process has exponential time complexity, doubling the input size can make it thousands or even millions of times slower. It's a good thing to know if this might be the case. Knowing how much time operations could take helps programmers avoid inefficient methods when working with large-scale computations.

In the Real World

Knowing the worst-case complexity of BDD operations has major real-world benefits:

Improved software and system design: Engineers can now avoid BDD operations that cause exponential slowdowns, leading to more efficient AI models, network optimizations, and chip designs.

Saving time and costs: Companies working on semiconductors, AI, and large-scale computing can prevent delays and unnecessary expenses by choosing operations that perform better.

Better consumer technology: More efficient computing means faster smartphones, better network speeds, and smoother AI-powered services.

On a human level, it means devices that process tasks more quickly, use less battery power, and deliver a smoother experience. Whether it's AI-powered assistants responding faster, web pages loading quicker, or internet services handling large-scale traffic more efficiently, NTT's research will mean practical improvements in the technology we use daily.

Future Applications and Developments

Now that we know just how long certain computations might potentially take, computer scientists can focus on developing strategies to work around these constraints. Future innovations in computer hardware, AI training, and complex data processing can benefit from a deeper knowledge of BDD efficiency, which can then lead to smarter, faster, and more reliable computing solutions.

For further information, please see this link:

https://group.ntt/en/newsrelease/2025/02/19/250219a.html

NTT—Innovating the Future of Computing

Daniel O'Connor

Daniel O'Connor joined the NTT Group in 1999 when he began work as the Public Relations Manager of NTT Europe. While in London, he liaised with the local press, created the company's intranet site, wrote technical copy for industry magazines and managed exhibition stands from initial design to finished displays.

Later seconded to the headquarters of NTT Communications in Tokyo, he contributed to the company's first-ever winning of global telecoms awards and the digitalisation of internal company information exchange.

Since 2015 Daniel has created content for the Group's Global Leadership Institute, the One NTT Network and is currently working with NTT R&D teams to grow public understanding of the cutting-edge research undertaken by the NTT Group.