November 8, 2023

NTT Corporation

An input interface that translates slight residual muscle movements into commands for operation in the metaverse

Toward facilitating rich communication and meaningful connections with society for individuals with severe physical disabilities

Tokyo - Nov. 8, 2023 - NTT Corporation (NTT) has developed an input interface that translates slight residual muscle movements into commands for operations in the metaverse. This innovation aims to facilitate rich communication and meaningful connections with society for individuals with severe physical disabilities. In addition to brain signals and gaze, these individuals will now be able to use surface electromyography signals as an input for augmenting their physicality in the metaverse and communicating their intentions.

Avatar and game control using this technology will be exhibited at the NTT R&D FORUM 2023 IOWN ACCELERATION※1.

1. Background

Individuals with severe physical disabilities requiring 24-hour care often spend their time in limited spaces such as their homes or care facilities. Despite their desire for social interaction and participation in society, communication and operation of information and communications technology (ICT) devices pose substantial challenges. Hence, research and development is being pursued worldwide on augmentative and alternative communication (AAC) technologies for individuals with severe physical disabilities to enable them to communicate their intentions. These technologies tend to focus on gaze input and brain signal input such as electroencephalography (EEG) to compensate for the substantial limitations in physical movement caused by muscular atrophy in those with severe physical disabilities.

As part of Project Humanity※2, which aims to solve problems from the perspective of human-centered design including not only individuals with disabilities but also those who support them, NTT is conducting research and development with the goal of creating technologies that will enable individuals with severe physical disabilities to communicate and connect with society in rich ways.

In the AAC technology field, few studies have focused on surface electromyography (sEMG※3), which is closely related to physical movement. We therefore focused on sEMG, with the aim of extending the physicality of individuals with severe physical disabilities into the metaverse. We developed an input interface that uses sEMG to translate slight residual muscle movements into operational commands, providing a natural means of augmenting their physicality for non-verbal expression in the metaverse and for operating ICT devices.

2. Points on the proposed input interface design

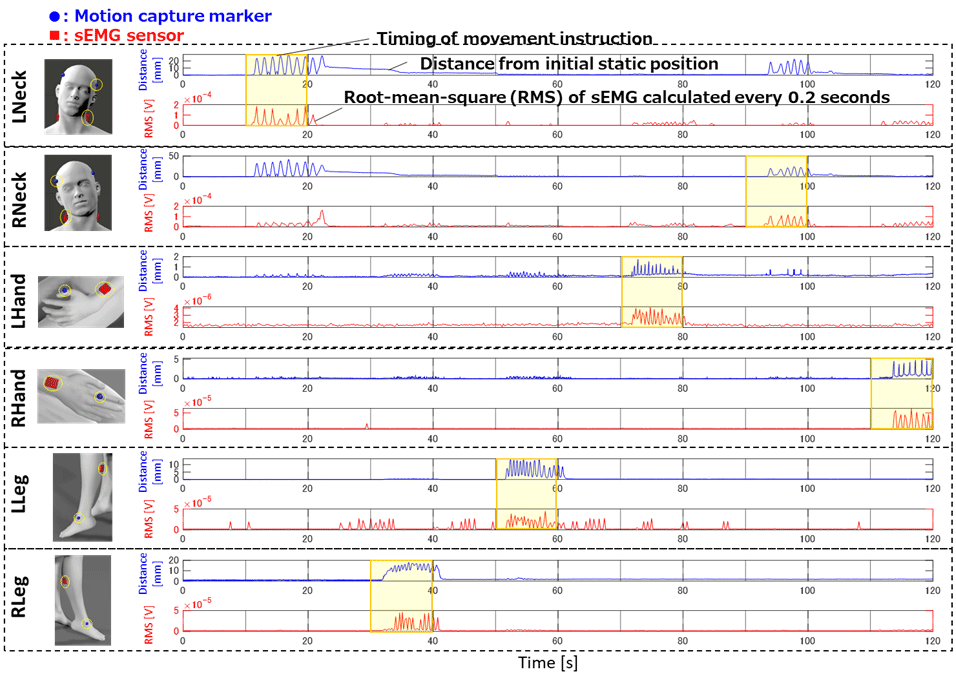

A specific sensor can measure sEMG even when muscles move only slightly. In practice, we measured sEMG and movement for an individual living with amyotrophic lateral sclerosis (ALS). This person could move every body part to a limited extent. We observed that sEMG was detected in response to movements of a few millimeters at each point on the body where a motion capture marker was attached (Figure 1). However, because disabilities vary between individuals, the usable body parts must be determined for each person according to the state of their disability and to context such as atmosphere and environment.

Figure 1 Time-series changes in the marker position at each body part as the distance from the initial static position (blue line) and the root mean square (RMS) calculated by the sEMG sensor (red line). The yellow highlights indicate the body parts instructed to move
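The RMS value plotted in Figure 1 can be illustrated with a minimal sketch. The window length, the non-overlapping windowing, and the sample values below are assumptions made for illustration; the release does not state how the sensor computes RMS.

```python
import math

def windowed_rms(samples, window):
    """Return the RMS of each consecutive, non-overlapping window of samples."""
    if window <= 0:
        raise ValueError("window must be positive")
    rms_values = []
    for start in range(0, len(samples) - window + 1, window):
        chunk = samples[start:start + window]
        mean_square = sum(x * x for x in chunk) / window
        rms_values.append(math.sqrt(mean_square))
    return rms_values

# Hypothetical signal: a quiet stretch followed by a small burst of activity.
signal = [0.0, 0.0, 0.0, 0.0, 3.0, -3.0, 3.0, -3.0]
rms = windowed_rms(signal, window=4)
print(rms)
```

Because RMS squares each sample, it rises whenever the muscle is active regardless of the sign of the raw signal, which is why it is a convenient quantity to compare against a threshold.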


When this individual intentionally attempted to move a specific body part, muscles in another body part sometimes responded as well. In Figure 1, such responses from other body parts can be seen during the intervals in which the individual moved each designated body part (the yellow bands). The key techniques for isolating the muscles of the intended body part are calibration, which sets the sEMG baseline to the relaxed state of the muscles at rest, and the setting of contraction-detection thresholds tailored to each body part. For example, for the DJ performances given on stage by an individual with ALS using this interface, we set his baseline in a quiet state immediately after the music started playing and dynamically adjusted the thresholds to match the level of muscle strain during his performances.
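The calibration and per-body-part thresholding described above might be sketched as follows. This is only an illustration: the threshold rule (baseline plus a multiple of the resting spread), the parameter values, the body-part names, and the data are all hypothetical and are not taken from NTT's implementation.

```python
from statistics import mean, pstdev

def calibrate(rest_rms):
    """Estimate the resting baseline and its spread from relaxed-state RMS values."""
    return mean(rest_rms), pstdev(rest_rms)

def make_threshold(baseline, spread, k=3.0, floor=0.01):
    """Hypothetical rule: baseline plus k spreads, with a minimum margin."""
    return baseline + max(k * spread, floor)

def detect_contractions(rms_values, threshold):
    """Return the indices where RMS rises above the threshold (rising edges only)."""
    onsets = []
    above = False
    for i, value in enumerate(rms_values):
        if value > threshold and not above:
            onsets.append(i)
        above = value > threshold
    return onsets

# Hypothetical resting recordings, one per body part.
rest = {
    "left_finger": [1.0, 1.2, 0.8, 1.0],
    "right_foot": [2.0, 2.0, 2.0, 2.0],
}
thresholds = {part: make_threshold(*calibrate(v)) for part, v in rest.items()}

# Hypothetical live RMS: the finger contracts twice, the foot only drifts slightly.
finger_onsets = detect_contractions([1.0, 1.1, 2.0, 2.2, 1.0, 1.9], thresholds["left_finger"])
foot_onsets = detect_contractions([2.0, 2.005, 2.0], thresholds["right_foot"])
```

Keeping a separate threshold per body part is what lets a small incidental response in one muscle be ignored while an intended contraction in another is still detected.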

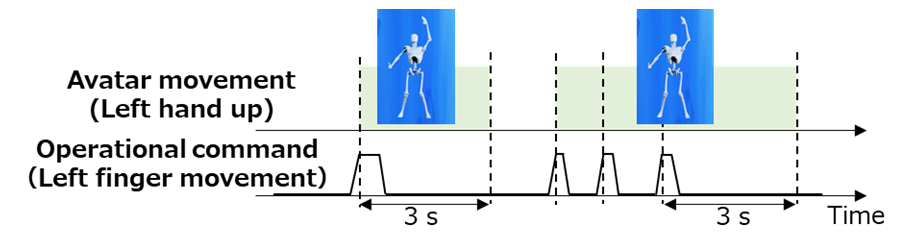

Muscle fatigue is an important consideration when converting continuous sEMG data measured by the sEMG sensors into operational commands for the metaverse. Individuals with severe physical disabilities experience a decline in both muscle strength and endurance, so the intended operational commands must be achieved while conserving body movement. We therefore avoided operational commands that rely on prolonged muscle contractions or on varying the strength of contractions. Instead, we adopted a policy in which each operational command is determined by the detection of a muscle contraction in each body part. Furthermore, we ensured that the avatar movement corresponding to each operational command is played for a fixed period of time. When the same operational command is repeated, the duration of the avatar movement is extended. For example, if extending the fingers makes the avatar raise its hands in the metaverse, the technology detects the finger extension and keeps the avatar's hands raised for a certain period of time in response (Figure 2). While this may initially appear to sacrifice realism and responsiveness, the individual with ALS found that his avatar moved in accordance with his intentions through subtle body movements. He felt this led to a natural sense of controlling the avatar through his own bodily sensations. Whereas previous AAC technologies often emphasize operational speed and realistic responses to movements, conserving movement for individuals with severe physical disabilities represents an entirely new design philosophy that we consider to be groundbreaking.

Figure 2 Avatar movement associated with operational command when the RMS of the sEMG exceeds the threshold value. In the example of a left finger extension, the avatar performs a hand raising motion for 3 seconds after the extension is recognized, and if an additional left finger movement is recognized while the hand is being raised, the avatar's movement is extended by 3 seconds from the recognition time.
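The timing behavior in Figure 2 can be sketched as a simple hold timer. The 3-second duration comes from the figure's example; the class and method names are hypothetical, and this is an illustration rather than NTT's implementation.

```python
HOLD_SECONDS = 3.0  # duration taken from the Figure 2 example

class AvatarMotion:
    """Keeps one avatar motion active for a fixed period after each command."""

    def __init__(self, hold_seconds=HOLD_SECONDS):
        self.hold_seconds = hold_seconds
        self.active_until = None

    def on_command(self, now):
        """A contraction was recognized at time `now` (in seconds)."""
        # The motion runs until hold_seconds after the latest recognition,
        # so a repeated command while the motion is active extends it.
        self.active_until = now + self.hold_seconds

    def is_active(self, now):
        return self.active_until is not None and now < self.active_until

hand_raise = AvatarMotion()
hand_raise.on_command(now=0.0)  # first left finger extension
hand_raise.on_command(now=2.0)  # recognized again while the hand is raised
print(hand_raise.active_until)
```

With this design the user only needs brief, occasional contractions to sustain a motion, rather than holding a muscle tense for the whole duration.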


3. Implementation of case studies for metaverse operations

(1) DJ performance through an avatar※4.

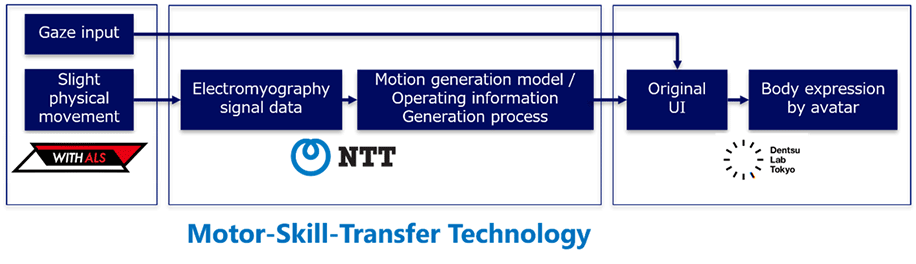

NTT and Dentsu Lab Tokyo (DLT) collaborated to develop a system that combines this technology with DLT's gaze input and avatar representation for DJ performances in the metaverse (Figure 3, ※5). With this system, individuals living with ALS can use gaze input to communicate their intentions (types of movement) for how they want the avatar to move, and can use this technology to trigger the avatar's actual movements. Furthermore, Mr. Masatane Muto, a representative with ALS and also a DJ, showcased a DJ performance remotely from Tokyo to Linz, Austria, on stage at the Ars Electronica Festival 2023 ※6.

Figure 3 System configuration and roles of each company established to achieve a DJ performance


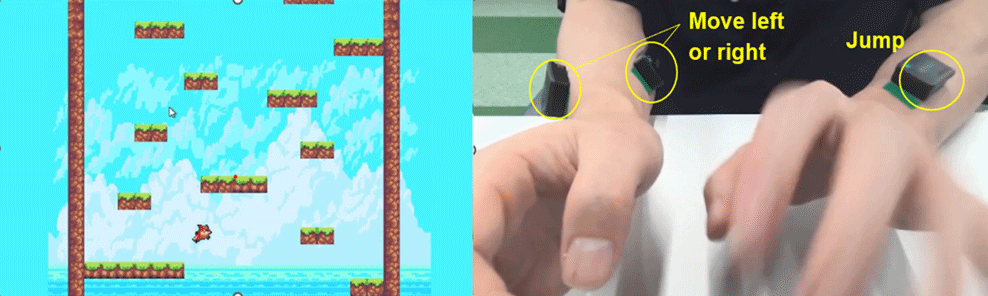

(2) Game Control

This is an implementation example of game control using this technology. We achieved control over in-game characters by enabling avatar commands to be converted into game-specific commands (Figure 4). Among various devices being developed, sEMG sensors hold promise for applications in various fields because these kinds of sensors can enable game control with subtle movements. In particular, enhancing the usability of ICT devices through eSports and fostering connections with people will be crucial elements leading to future societal inclusion.

Figure 4 An implementation example of game control based on the sEMG input using this technology
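The conversion of avatar commands into game-specific commands could be as simple as a lookup table. The sketch below is a hypothetical illustration; the command names and the mapping are invented, as the release does not describe the actual commands or game.

```python
# Hypothetical avatar commands mapped to hypothetical game actions.
AVATAR_TO_GAME = {
    "raise_left_hand": "move_left",
    "raise_right_hand": "move_right",
    "nod": "jump",
}

def to_game_commands(avatar_commands, mapping=AVATAR_TO_GAME):
    """Translate avatar commands, skipping any that the game does not use."""
    return [mapping[c] for c in avatar_commands if c in mapping]

game_inputs = to_game_commands(["raise_left_hand", "wave", "nod"])
print(game_inputs)
```

Separating the mapping from the sEMG detection means the same detected commands can be reused for a different game by swapping only the table.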


4. Future

In fiscal year 2024, we aim to enhance the technology so that it is adaptable to varying degrees of disabilities, further expanding into diverse forms of communication expression. We strive to achieve communication that does not emphasize the background of having a disability, both in the metaverse and in real spaces mediated by robots. Additionally, by enabling individuals with severe physical disabilities to pursue self-actualization through rich communication, we will contribute to a future where they can actively participate in society.

※1"NTT R&D FORUM 2023 -IOWN ACCELERATION" official site

URL:https://www.rd.ntt/e/forum/2023

※2We believe developing technologies based on a "human-centered" principle is essential. Project Humanity aims to create a world where people can respect diversity and can understand each other.

※3Action potentials are generated when muscle fibers contract.

※4Released on August 25, 2023: Musician with ALS Performs at Ars Electronica Festival

Experience freedom through his avatar motions synchronized with remaining physical functions

https://group.ntt/en/newsrelease/2023/08/25/230825a.html

※5Released on June 14, 2023: Initiatives for rich communication among ALS symbionts begin

https://group.ntt/en/newsrelease/2023/06/14/230614a.html

※6Dentsu Lab Tokyo's All Players Welcome Enables 'Physical' Expression in Metaverse | LBBOnline

https://www.lbbonline.com/news/dentsu-lab-tokyos-all-players-welcome-enables-physical-expression-in-metaverse

About NTT

NTT contributes to a sustainable society through the power of innovation. We are a leading global technology company providing services to consumers and businesses as a mobile operator and as an infrastructure, networks, applications, and consulting provider. Our offerings include digital business consulting, managed application services, workplace and cloud solutions, data center and edge computing, all supported by our deep global industry expertise. We have over $95B in revenue and 330,000 employees, with $3.6B in annual R&D investments. Our operations span across 80+ countries and regions, allowing us to serve clients in over 190 of them. We serve over 75% of Fortune Global 100 companies, thousands of other enterprise and government clients and millions of consumers.

Media contact

NTT Service Innovation Laboratory Group

Public Relations

nttrd-pr@ml.ntt.com

Information is current as of the date of issue of the individual press release.

Please be advised that information may be outdated after that point.
